Denizenslab

Cognitive Computing in Biological and Artificial Systems @ TU Berlin

TU Berlin, MAR building

Marchstraße 23

10587 Berlin, Germany

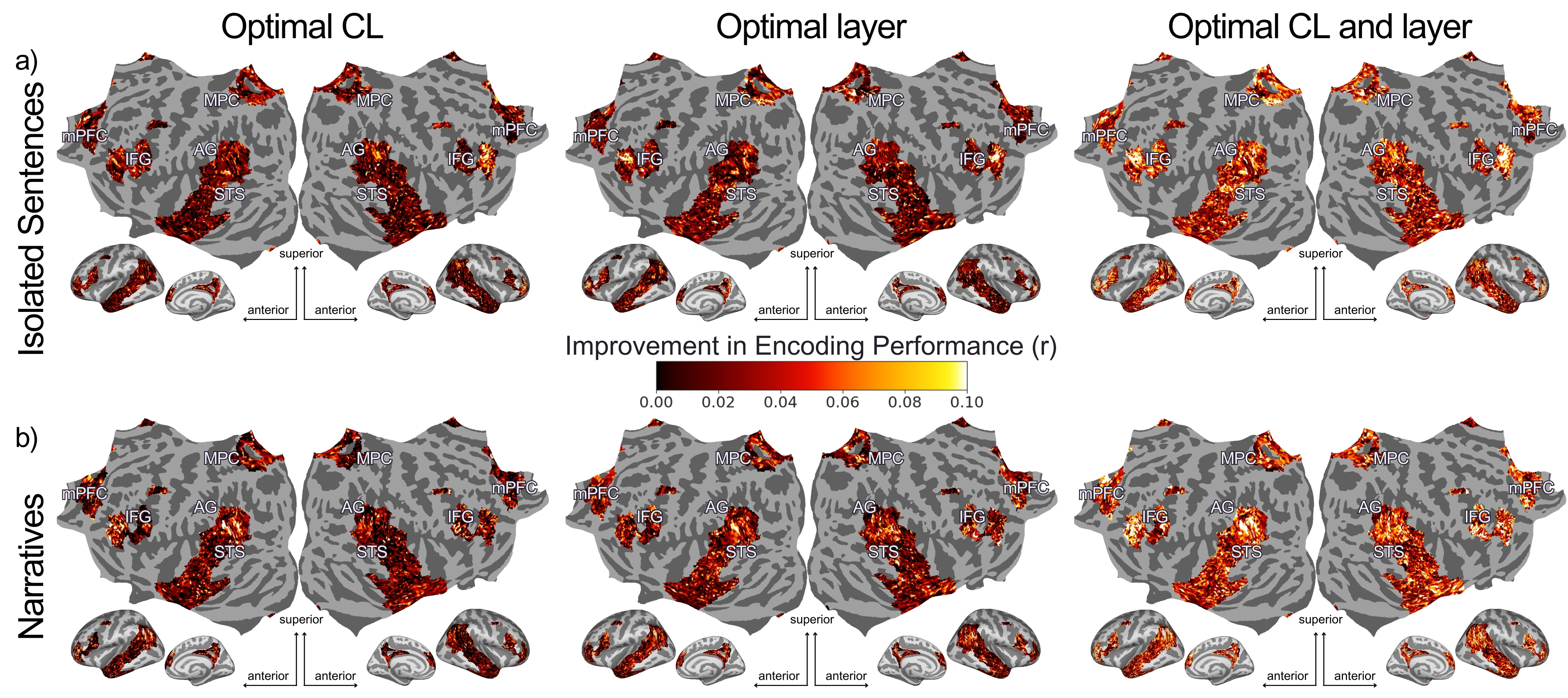

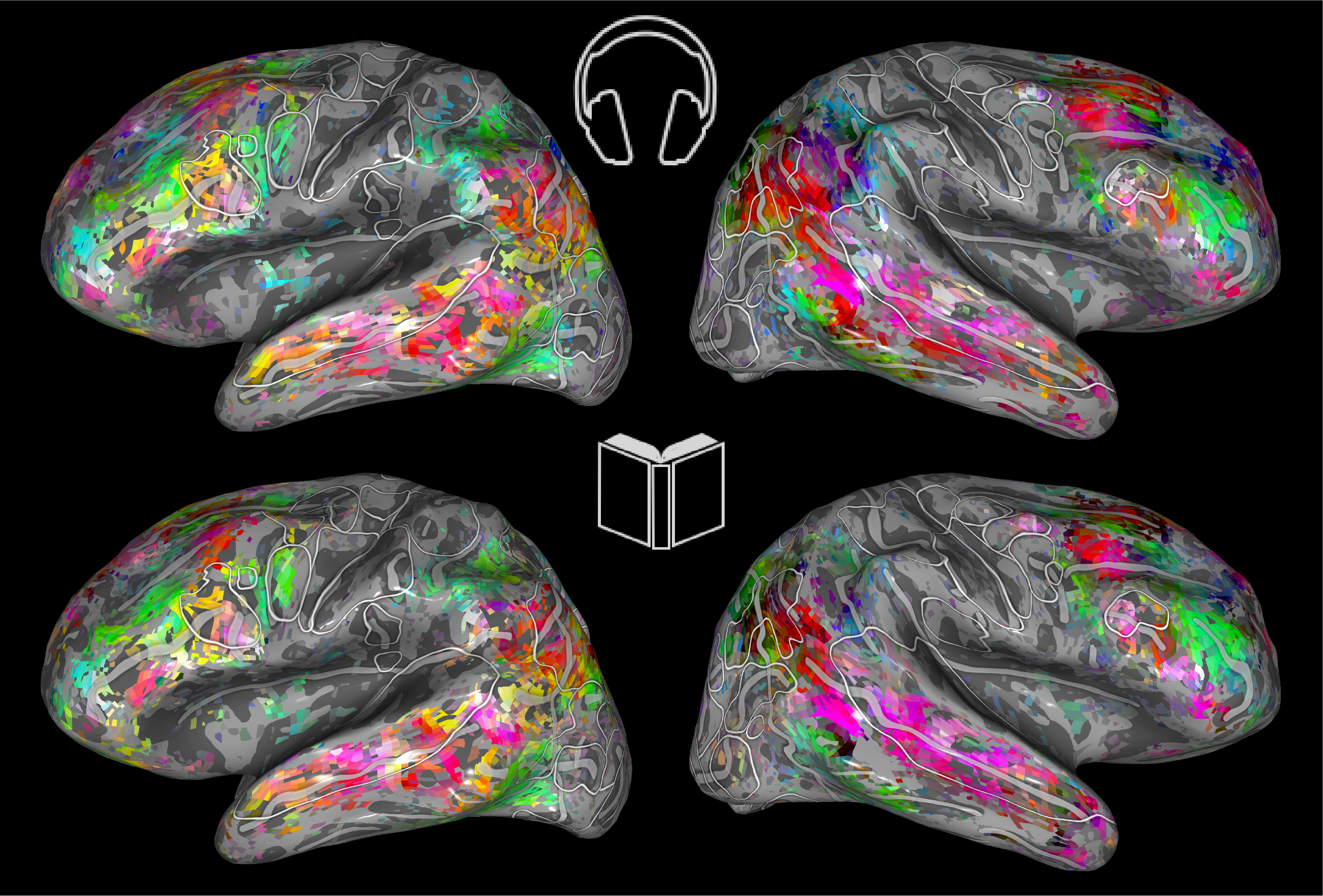

Our group's goal is to deepen the connection between artificial intelligence, data science, and neuroscience in both research and teaching. We develop computational models of human brain responses recorded under ecologically valid conditions. By studying language representation, communication, and cognition in biological and artificial systems, we aim to better understand the neural and computational bases of brain processes in naturalistic settings. We also use insights from brain research to understand and improve artificial neural language models. Our ultimate goal is to make artificial systems more explainable and to deepen our understanding of the human brain.

news

| Dec 03, 2025 | Congratulations! Our PI Fatma Deniz was elected today as the new president of Technische Universität Berlin! |
|---|---|
| Nov 18, 2025 | Our paper "Brain-Informed Fine-Tuning for Improved Multilingual Understanding in Language Models" has been accepted to NeurIPS! Our PhD candidate Anuja Negi will present the poster at NeurIPS in San Diego. |
| Nov 04, 2025 | "How Brains Teach Us to Lead Change Better", a TEDxBerlin talk by Prof. Fatma Deniz |

selected publications

- Encoding models in functional magnetic resonance imaging: the Voxelwise Encoding Model framework (Sep 2025)